AI Observability in Production: Monitoring, Anomaly Detection, and Feedback Loops for Smart Applications

1. Introduction

AI has left the lab.

Machine learning models now power real-time decisions in marketplaces, fintech apps, logistics platforms, and personalization systems. But once deployed, AI behaves differently — data changes, user behavior evolves, and unseen variables creep in.

Unlike traditional software, AI systems don’t follow explicit, deterministic rules. They operate probabilistically, so even small data shifts can cause silent errors: the model keeps returning answers, but their quality quietly degrades.

The question is no longer “Can we build AI?” but “Can we trust it in production?”

That’s where AI observability comes into play — the new frontier in MLOps and DevOps engineering.

2. What Is AI Observability?

AI observability refers to the ability to understand, monitor, and diagnose AI behavior in production environments.

It combines traditional observability practices (logs, metrics, traces) with machine learning–specific data such as:

- model predictions and confidence levels,

- input data distributions,

- performance drift over time,

- explainability metrics, and

- user feedback loops.

In short: while DevOps observability tells you what happened, AI observability tells you why the model did it.

3. Why AI in Production Fails Without Observability

1. Data Drift

Models are trained on historical data, but real-world data constantly changes. When input distributions shift, predictions become unreliable — sometimes without any visible error message.

2. Concept Drift

Even if the input data looks the same, the relationship between inputs and outcomes can change. A recommendation engine that worked last month may perform worse once user preferences evolve.

3. Black-Box Decisions

Without visibility into how a model arrives at a decision, debugging and accountability become far more difficult.

4. Ethical and Legal Risks

Biases in AI can emerge or worsen over time, creating compliance and fairness issues that go unnoticed without monitoring.

4. Core Components of AI Observability

A. Data Monitoring

Continuous tracking of input and output data distributions shows whether data quality remains consistent.

Tools such as WhyLabs or Evidently AI can automatically flag anomalies or missing features as new data arrives.
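To make this concrete, here is a minimal sketch of the kind of per-record checks such tools automate, written in plain Python rather than any tool's API. The feature names (`price`, `vendor_rating`) and their expected ranges are hypothetical placeholders for a real schema.

```python
import math
from collections import Counter
from typing import Any

# Hypothetical schema: feature name -> (min, max) range observed in training data.
EXPECTED_RANGES: dict[str, tuple[float, float]] = {
    "price": (0.0, 10_000.0),
    "vendor_rating": (1.0, 5.0),
}


def check_record(record: dict[str, Any]) -> list[str]:
    """Return the data-quality issues found in one numeric input record."""
    issues = []
    for feature, (low, high) in EXPECTED_RANGES.items():
        value = record.get(feature)
        if value is None or (isinstance(value, float) and math.isnan(value)):
            issues.append(f"missing:{feature}")
        elif not low <= value <= high:
            issues.append(f"out_of_range:{feature}")
    return issues


def issue_rates(records: list[dict[str, Any]]) -> dict[str, float]:
    """Share of records in a batch affected by each issue type."""
    counts = Counter(issue for r in records for issue in check_record(r))
    return {issue: n / len(records) for issue, n in counts.items()}


batch = [{"price": 49.0, "vendor_rating": 4.5}, {"price": -3.0}]
print(issue_rates(batch))  # {'out_of_range:price': 0.5, 'missing:vendor_rating': 0.5}
```

In production, these per-batch rates would be tracked over time and alerted on when they jump, rather than inspected once.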

B. Model Performance Metrics

Accuracy, precision, recall, and F1 scores are no longer enough on their own, especially since the ground-truth labels needed to compute them often arrive late in production. You also monitor:

- Latency (inference time),

- Throughput,

- Confidence calibration,

- Prediction stability over time.
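Two of these signals are easy to compute from logged predictions. The sketch below assumes you already log per-request latency, the model's confidence, and (once it arrives) whether each prediction was correct. It computes a nearest-rank latency percentile and the expected calibration error (ECE), a common way to quantify confidence calibration. The function names and the 10-bin default are illustrative choices.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def expected_calibration_error(
    confidences: Sequence[float], correct: Sequence[bool], n_bins: int = 10
) -> float:
    """Weighted gap between average confidence and accuracy across confidence bins."""
    bins: list[list[tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 lands in the last bin
        bins[idx].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(ok for _, ok in members) / len(members)
        ece += len(members) / total * abs(accuracy - avg_conf)
    return ece


latencies_ms = [12.0, 15.0, 11.0, 240.0, 13.0]
print(percentile(latencies_ms, 95))  # 240.0
print(expected_calibration_error([0.9, 0.9, 0.6, 0.6], [True, False, True, True]))
```

An ECE near zero means the model's stated confidence matches how often it is actually right; a rising ECE is an early hint that confidence scores should no longer be trusted for thresholds or routing.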

C. Drift Detection

Statistical measures such as Kullback–Leibler (KL) divergence and the Population Stability Index (PSI) compare live data with training data to detect drift before it impacts users.
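Both measures compare binned distributions. Below is a minimal sketch that bins a feature using cut points taken from the training data, then computes PSI and KL divergence. The cut points, the small `eps` floor for empty bins, and the sample values are assumptions for illustration. PSI as written here equals KL(expected || actual) + KL(actual || expected).

```python
import math
from bisect import bisect_right
from typing import Sequence


def bin_shares(values: Sequence[float], cut_points: Sequence[float], eps: float = 1e-6) -> list[float]:
    """Fraction of values in each bin defined by sorted cut points (len(cut_points) + 1 bins)."""
    counts = [0] * (len(cut_points) + 1)
    for v in values:
        counts[bisect_right(cut_points, v)] += 1
    # Floor empty bins at eps so the logarithms below stay finite.
    return [max(c / len(values), eps) for c in counts]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def psi(expected: Sequence[float], actual: Sequence[float]) -> float:
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


cut_points = [20.0, 50.0, 100.0]  # hypothetical, e.g. training-set quartiles of price
train = bin_shares([10, 30, 60, 120, 15, 40, 80, 150], cut_points)
live = bin_shares([18, 45, 70, 90, 110, 130, 160, 95], cut_points)
score = psi(train, live)
print(f"PSI = {score:.3f}")  # PSI = 0.275
```

A commonly quoted rule of thumb reads PSI below 0.1 as little shift, 0.1 to 0.25 as moderate, and above 0.25 as significant; in practice, thresholds are usually tuned per feature.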

D. Explainability and Transparency

Using frameworks like SHAP, LIME, or Captum, teams can understand why a model made a decision — crucial for debugging and compliance.
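SHAP is built on Shapley values from cooperative game theory. To show what it approximates, the sketch below computes exact Shapley values for a single prediction by enumerating every feature coalition and replacing "absent" features with a baseline input. This is not the SHAP library's API. The single-baseline simplification and the toy pricing model are assumptions, and the exponential cost (2^n coalitions) is why libraries like SHAP rely on approximations for real models.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley attribution of model(x) - model(baseline) to each feature."""
    n = len(x)

    def value(present: set[int]) -> float:
        # Features in `present` keep their real value; the rest fall back to the baseline.
        return model([x[i] if i in present else baseline[i] for i in range(n)])

    attributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        attributions.append(phi)
    return attributions


# Hypothetical pricing model over [vendor_rating, demand_index].
def toy_price(z: Sequence[float]) -> float:
    return 10.0 + 4.0 * z[0] + 2.0 * z[0] * z[1]


x = [4.5, 1.2]
baseline = [3.0, 1.0]  # e.g. average feature values from training data
phis = shapley_values(toy_price, x, baseline)
print(phis, sum(phis), toy_price(x) - toy_price(baseline))
```

The attributions always sum to the gap between the prediction and the baseline prediction, which is what makes them useful for answering "which feature moved this price?"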

E. Feedback Loops

Collecting real-world feedback (user corrections, click behavior, satisfaction ratings) supplies fresh labels, so models can be evaluated against real outcomes and retrained to improve continuously.
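Feedback only helps if it can be matched back to the prediction it refers to. Here is a minimal sketch that assumes each prediction is logged with a request ID and that feedback events carry the same ID. It joins the two, keeps only predictions whose outcome is known, and returns both new training examples and a live accuracy figure. The record types and field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class PredictionLog:
    request_id: str
    predicted: str
    features: dict[str, Any] = field(default_factory=dict)


@dataclass
class FeedbackEvent:
    request_id: str
    actual: str  # user correction or observed outcome


def join_feedback(
    predictions: list[PredictionLog], feedback: list[FeedbackEvent]
) -> tuple[list[tuple[dict[str, Any], str]], Optional[float]]:
    """Return (training examples, live accuracy) for predictions that received feedback."""
    outcome = {f.request_id: f.actual for f in feedback}  # later events win on duplicates
    labeled = [(p, outcome[p.request_id]) for p in predictions if p.request_id in outcome]
    examples = [(p.features, actual) for p, actual in labeled]
    if not labeled:
        return examples, None  # labels often arrive late; nothing to score yet
    accuracy = sum(p.predicted == actual for p, actual in labeled) / len(labeled)
    return examples, accuracy


preds = [
    PredictionLog("r1", "shoes", {"query": "sneakers"}),
    PredictionLog("r2", "books", {"query": "novel"}),
    PredictionLog("r3", "toys", {"query": "lego"}),
]
fb = [FeedbackEvent("r1", "shoes"), FeedbackEvent("r2", "music")]
examples, acc = join_feedback(preds, fb)
print(len(examples), acc)  # 2 0.5
```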

5. Tools and Platforms for AI Observability

Modern AI ecosystems offer both open-source and enterprise-grade observability tools.

Open Source and Developer-Focused:

- Evidently AI – Drift detection and data visualization.

- Arize AI – Model monitoring and embedding analysis.

- WhyLabs – Automated data observability with Python integration.

- Weights & Biases – Experiment tracking and live model dashboards.

Enterprise Solutions:

- Fiddler AI, Arthur, Datadog ML, Amazon SageMaker Model Monitor – Complete platforms for ML observability, alerting, and governance.

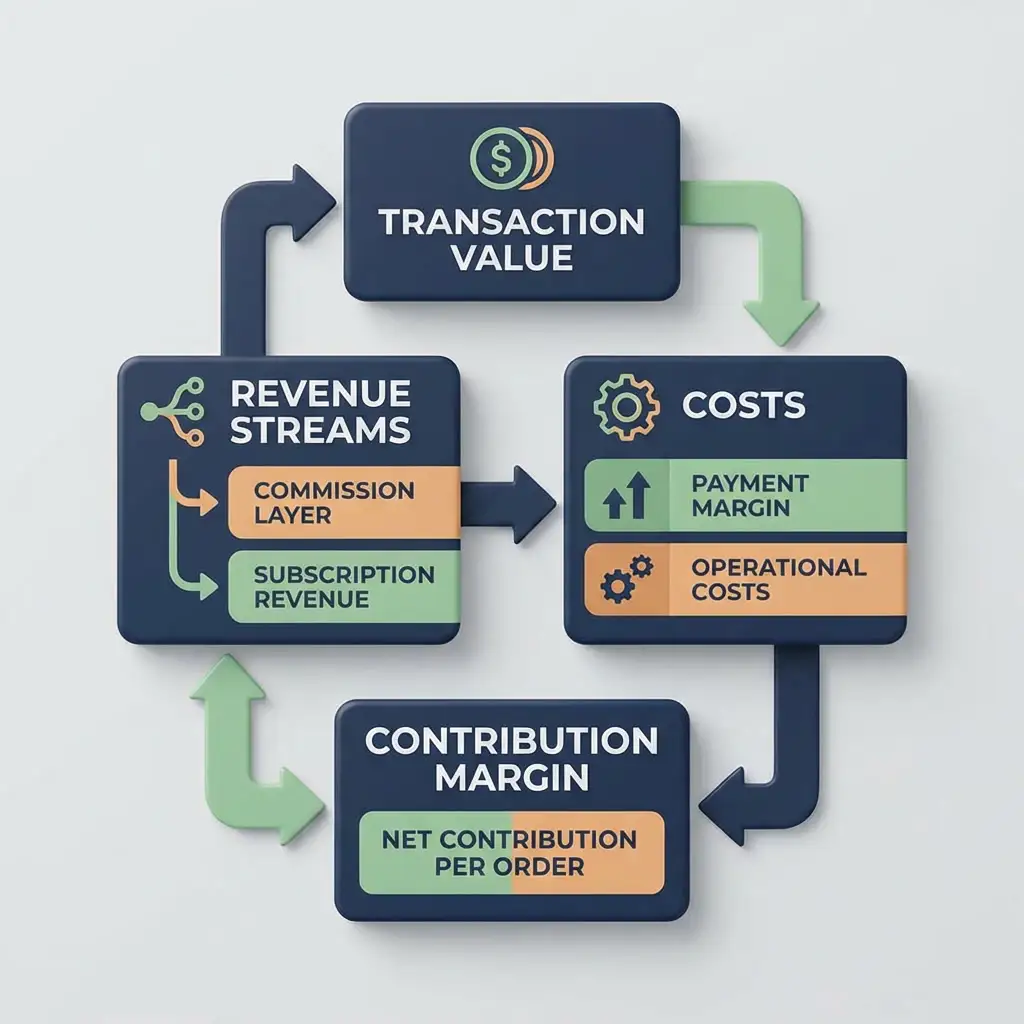

6. Architecture of an Observable AI System

A robust AI observability pipeline includes:

- Data Pipeline Observability – Track every transformation from raw input to model-ready data.

- Model Observability Layer – Log predictions, confidence levels, and versioning info.

- Monitoring and Alerting – Detect anomalies, outliers, and latency issues.

- Feedback Integration – Capture user outcomes and feed them back for retraining.

- Visualization Dashboards – Aggregate insights into actionable, real-time analytics.
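The model observability layer is often just disciplined logging. Here is a sketch of a wrapper that emits one structured JSON record per prediction, containing the prediction, confidence, and model version plus a request ID and latency, using only Python's standard `logging` and `json` modules. The field names and the `predict_fn` contract (returning a prediction and a confidence) are assumptions.

```python
import json
import logging
import time
import uuid
from typing import Any, Callable

logger = logging.getLogger("model.predictions")

PredictFn = Callable[[dict[str, Any]], tuple[Any, float]]


def observed_predict(predict_fn: PredictFn, model_version: str, features: dict[str, Any]) -> dict[str, Any]:
    """Run one prediction and log a structured record that monitoring jobs can aggregate."""
    start = time.perf_counter()
    prediction, confidence = predict_fn(features)
    record = {
        "request_id": str(uuid.uuid4()),  # lets feedback be joined back later
        "timestamp": time.time(),
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
        "confidence": confidence,
        "latency_ms": round((time.perf_counter() - start) * 1000, 3),
    }
    logger.info(json.dumps(record))
    return record


logging.basicConfig(level=logging.INFO, format="%(message)s")
rec = observed_predict(lambda f: (f["base_price"] * 1.1, 0.82), "pricing-v3", {"base_price": 20.0})
```

Writing one JSON line per prediction keeps the records easy to ship to whatever log pipeline, alerting system, or dashboard the team already runs.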

These layers work together to ensure that when something breaks — whether it’s data, logic, or infrastructure — engineers can detect and fix it fast.

7. Case Study: AI Observability in a Marketplace Platform

Imagine a marketplace that uses machine learning for dynamic pricing.

Initially, the model performs well, optimizing prices across thousands of listings. But over time:

- new vendors join,

- product categories expand,

- and user behavior shifts due to seasonality.

Without observability, the model starts producing inaccurate predictions — some items overpriced, others underpriced.

With observability in place:

- Drift detection triggers alerts when data patterns shift,

- Feedback loops collect vendor responses (“too expensive”/“too cheap”),

- Automated retraining updates the model weekly,

- Explainability tools reveal that shifts in the “vendor rating” feature were driving the errors.
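The vendor feedback in this scenario can be turned into an alert signal directly. Here is a minimal sketch, assuming feedback arrives as (category, label) pairs where the label is "too_expensive", "too_cheap", or "ok". It flags any category where one complaint type exceeds a share threshold and ignores categories with too little feedback. The thresholds and label names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Iterable


def mispricing_alerts(
    feedback: Iterable[tuple[str, str]], min_count: int = 20, threshold: float = 0.3
) -> list[tuple[str, str, float]]:
    """Return (category, complaint, share) for categories with a systematic pricing complaint."""
    totals: Counter[str] = Counter()
    complaints: defaultdict[str, Counter[str]] = defaultdict(Counter)
    for category, label in feedback:
        totals[category] += 1
        if label != "ok":
            complaints[category][label] += 1
    alerts = []
    for category, n in totals.items():
        if n < min_count:
            continue  # too few responses to distinguish a trend from noise
        for label, k in complaints[category].items():
            if k / n >= threshold:
                alerts.append((category, label, k / n))
    return alerts


feedback = (
    [("electronics", "too_expensive")] * 12
    + [("electronics", "ok")] * 18
    + [("books", "too_cheap")] * 5
)
print(mispricing_alerts(feedback))  # [('electronics', 'too_expensive', 0.4)]
```

Here "books" is skipped despite unanimous complaints because five responses fall below `min_count`; that trade-off between speed and noise is the main knob to tune.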

Result: pricing remains adaptive, transparent, and profitable.

8. Beyond Monitoring: AI Governance and Ethics

Observability also ties into AI governance — ensuring responsible, fair, and explainable use of algorithms.

A good observability setup can help detect:

- Bias propagation – unfair model treatment of specific groups.

- Unintended correlations – e.g., models predicting income from ZIP codes.

- Regulatory breaches – noncompliance with GDPR or the EU AI Act.
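Bias propagation can be watched with simple group metrics computed on logged decisions. The sketch below computes per-group selection rates and their min/max ratio, often called the disparate impact ratio; the commonly cited "four-fifths" heuristic treats a ratio below 0.8 as worth investigating. Group labels and data are hypothetical, and a single ratio is a screening signal rather than a full fairness assessment.

```python
from collections import Counter
from typing import Iterable


def selection_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive decisions (e.g. approvals) per group."""
    totals: Counter[str] = Counter()
    positives: Counter[str] = Counter()
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


decisions = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 4 + [("group_b", False)] * 6
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)  # {'group_a': 0.8, 'group_b': 0.4} 0.5
```

Tracking this ratio per model version over time is what turns a one-off fairness audit into observability.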

Thus, observability is not just a technical feature — it’s a trust layer for ethical AI.

9. Implementing AI Observability Step by Step

- Start small – pick one critical model and instrument it for monitoring.

- Collect the right signals – accuracy alone isn’t enough; log inputs, outputs, and confidence levels.

- Visualize metrics – dashboards give context, not just numbers.

- Automate alerts – detect drift or anomalies proactively.

- Integrate feedback loops – learn from real-world corrections and user behavior.

- Iterate – continuously refine both model and monitoring pipelines.
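For the "automate alerts" step, a rolling z-score is one of the simplest anomaly detectors for a metric stream such as p95 latency, mean confidence, or a drift score. This is a minimal sketch: the window size, threshold, and warm-up length are assumptions to tune, and production systems often prefer more robust statistics.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flag values that sit more than `threshold` standard deviations from the recent mean."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_history: int = 10) -> None:
        self.history: deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def update(self, value: float) -> bool:
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            std = statistics.pstdev(self.history)
            if std > 0 and abs(value - mean) / std > self.threshold:
                is_anomaly = True
        # Every value joins the window, so a sustained shift gradually becomes the new baseline.
        self.history.append(value)
        return is_anomaly


detector = RollingZScoreDetector()
normal_flags = [detector.update(v) for v in [100.0, 102.0] * 10]  # e.g. p95 latency in ms
spike_flag = detector.update(150.0)
print(any(normal_flags), spike_flag)  # False True
```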

The goal is to make observability part of the MLOps lifecycle, not an afterthought.

10. The Future of AI Observability

By 2030, observability systems are likely to be largely self-adaptive, automatically diagnosing model failures and initiating retraining cycles.

AI will monitor AI:

- detecting anomalies autonomously,

- explaining its own decisions,

- and maintaining ethical boundaries through embedded governance rules.

In a world of autonomous systems, trust will be the new uptime, and observability will be the foundation of that trust.

Conclusion

AI observability is becoming as critical to machine learning as testing is to traditional software.

It ensures not just that models run — but that they run right.

For companies deploying AI-driven applications or marketplaces, observability means:

- fewer failures,

- faster iterations,

- and more confidence in decisions that shape user experience.

As AI continues to evolve, one truth becomes clear: the smarter the system, the greater the need to observe it.
